Comprehensive analysis of different commercial auto-segmentation tools for multi-site OAR contouring

PO-1636

Abstract

Authors: Gerd Heilemann1, Martin Buschmann1, Wolfgang Lechner1, Vincent Moritz Dick1, Franziska Eckert1, Martin Heilmann1, Harald Herrmann1, Mercedes Hudelist-Venz1, Matthias Moll1, Johannes Knoth1, Stefan Konrad1, Inga Malin Simek1, Christopher Thiele1, Zaharie Alexandru-Teodor1, Dietmar Georg1, Joachim Widder1, Trnkova Petra1

1Medical University of Vienna, Department of Radiation Oncology, Vienna, Austria

Purpose or Objective

To analyze the performance of commercial organ-at-risk (OAR) auto-segmentation software for clinical use and to describe a methodology for its clinical validation.

Material and Methods

AI-based contours were generated automatically by two commercial software tools on CT images from representative sets of at least 15 patients each for the brain, head-and-neck, thorax, abdomen, and female and male pelvis, and were benchmarked against the corresponding manual contours drawn by experts. The following metrics were statistically analyzed for more than 25 different OARs: 1) geometric metrics (Dice similarity coefficient (DSC) and Hausdorff distance (HD)); 2) dosimetric metrics (relative differences in mean dose and in near-maximum dose D1%); and 3) qualitative metrics (physician rating from 1 = poor quality to 4 = good quality/clinically acceptable, with each structure rated by at least two physicians).
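The geometric and dosimetric metrics listed above can be illustrated with a minimal sketch. It assumes each contour is a set of (i, j, k) voxel indices, that the voxel spacing is given in mm (e.g. a 4 mm slice thickness along k), that D1% is taken as the lowest dose among the hottest 1% of equally sized voxels without interpolation, and that the per-voxel doses inside each contour are already available. The function names are hypothetical; the abstract does not describe how either commercial tool or the authors computed these values.

```python
import math
from statistics import mean


def dice(a, b):
    """Dice similarity coefficient 2|A n B| / (|A| + |B|) for two sets of voxel indices."""
    if not a and not b:
        return 1.0  # convention: two empty contours agree perfectly
    return 2 * len(a & b) / (len(a) + len(b))


def hausdorff(a, b, spacing=(1.0, 1.0, 1.0)):
    """Symmetric Hausdorff distance in mm between two non-empty voxel sets.

    Brute force over all voxels (O(|A| * |B|)); practical tools usually work
    on surface points and use spatial indexing instead.
    """
    # Convert (i, j, k) indices to physical coordinates using the voxel spacing.
    pa = [tuple(c * s for c, s in zip(v, spacing)) for v in a]
    pb = [tuple(c * s for c, s in zip(v, spacing)) for v in b]

    def directed(p, q):
        # Largest distance from a point in p to its nearest neighbour in q.
        return max(min(math.dist(x, y) for y in q) for x in p)

    return max(directed(pa, pb), directed(pb, pa))


def near_max_dose(doses, percent=1.0):
    """D1%-style near-maximum dose: lowest dose among the hottest `percent` % of voxels.

    Assumes equal voxel volumes and no interpolation between voxels.
    """
    ranked = sorted(doses, reverse=True)
    k = max(1, math.ceil(len(ranked) * percent / 100))
    return ranked[k - 1]


def relative_diff(auto, manual):
    """Relative difference (%) of a dose metric for the AI contour vs. the manual benchmark."""
    return 100.0 * (auto - manual) / manual


def dose_metrics(doses):
    """Mean dose and D1% for the per-voxel doses inside one contour."""
    return {"mean": mean(doses), "D1%": near_max_dose(doses)}
```

For example, `relative_diff(dose_metrics(auto_doses)["mean"], dose_metrics(manual_doses)["mean"])` would give the relative mean-dose difference, where `auto_doses` and `manual_doses` are hypothetical lists of planned dose values sampled inside the AI-generated and manual contours.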

Results

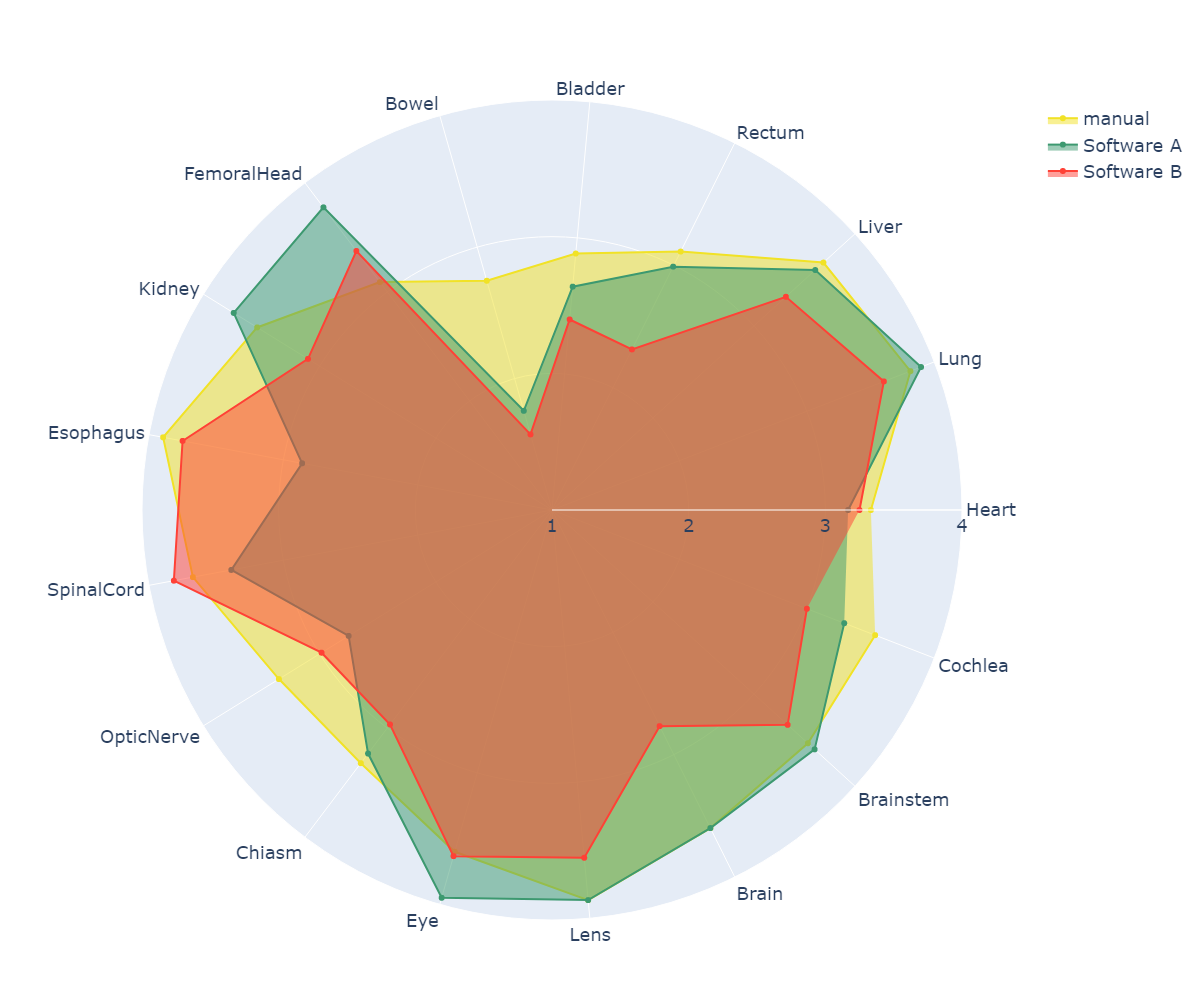

Contours of a total of 180 patients were evaluated for more than 25 different OARs. The geometric evaluation yielded good results, with a mean DSC above 0.9 for 29% of all OARs and above 0.8 for 59%. A few structures showed poor geometric agreement with the benchmark structure (DSC < 0.6): bowel, cochlea, chiasm, and larynx. Differences between the tested commercial tools were minimal (mean DSC difference of 0.02); however, the scores differed significantly between the software tools for 29% of the OARs (bowel, brain, cochlea, eye, lens). Relative dosimetric differences were within 5% for the majority (92%) of OARs and exceeded 10% only for the heart and bowel. Statistically significant differences in the relative dosimetric variations between the software tools were observed for 20% of the OARs (bowel, lens, lung, rectum). The mean physician ratings were 3 or higher for all OARs except the bowel, bladder, chiasm, and optic nerve (see Figure 1). The largest differences between the AI-segmented and manually segmented OARs were attributable to differences between the clinical protocols used for OAR definition or, for small OARs, to the CT resolution (4 mm slice thickness). A comprehensive analysis of the correlation matrix of the different metrics suggested only a moderate correlation between the geometric metrics and clinical acceptability (i.e., the physician score). Hence, the geometric score should not be taken as a surrogate for clinical usability.
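A correlation matrix of the kind mentioned above could be built as in the following sketch. The abstract does not state which correlation coefficient was used; Spearman's rank correlation is assumed here because physician scores are ordinal and contain many ties. Spearman's rho is computed as the Pearson correlation of tie-averaged ranks. The input format (a dict mapping hypothetical metric names such as "dsc" or "physician_score" to equally long per-structure lists) and all function names are illustrative assumptions.

```python
import math
from statistics import mean


def average_ranks(values):
    """Return 1-based ranks; tied values share the mean of the ranks they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of tied values starting at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant series")
    return sxy / math.sqrt(sxx * syy)


def spearman(x, y):
    """Spearman's rho: Pearson correlation computed on tie-averaged ranks."""
    return pearson(average_ranks(x), average_ranks(y))


def correlation_matrix(metrics):
    """Pairwise Spearman correlations for a dict of equally long metric lists."""
    names = list(metrics)
    return {(p, q): spearman(metrics[p], metrics[q]) for p in names for q in names}
```

A rank-based coefficient only assumes a monotonic relationship between metrics, which suits mixing continuous values (DSC, HD, dose differences) with a 1 to 4 rating scale; a moderate coefficient between DSC and the physician score would correspond to the finding reported above.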

Conclusion

A comprehensive study was performed to select and implement commercially available auto-segmentation tools for OARs across all treatment sites in radiation oncology. Several different metrics were compared, and the physician score was found to be the most meaningful metric for determining the performance and usability of the software tools. The investigated AI-based segmentation algorithms need to be improved in the bowel region for clinical use.